As an economist and also somebody who loves facts, I never stop beating the drum of sticker prices vs. purchase prices in higher education. Long story short, the dizzying rise in sticker prices (the headline numbers decried by the news media) is mitigated and maybe even reversed when we look at the net price (after accounting for grants and scholarships). Over the past 5 years or so, the former has risen sharply while the latter is basically flat across all schools and declining for private universities. People, even very smart people, almost uniformly ignore net prices when discussing what the rising cost of education means, and especially when talking about its effect on the poor. This is backwards: lots of programs target low-income students in particular.

Since smart people with opinions on education policy don’t pay attention to net prices, it’s not surprising that most Americans aren’t aware of their financial aid options. This suggests an obvious policy change: we should inform people of how much financial aid they are eligible to receive. If we do so, the reasoning goes, more of them will go to college, especially at the low end of the income scale. Yesterday I saw a talk by Eric Bettinger on the latest results from an experiment designed to test such a policy. Bettinger and coauthors Bridget Terry Long, Philip Oreopoulos, and Lisa Sanbonmatsu convinced H&R Block to offer a group of its tax services clients either a) information about their financial aid eligibility or b) the same information, along with assistance in completing the FAFSA, which is required for almost all financial aid. At baseline, the typical person overestimated the net cost of college attendance by a factor of 3.

Option b worked like gangbusters: recipients of the FAFSA assistance were 8 percentage points more likely to attend college, and the effect remains detectable well into their college years. Option a, just information about financial aid eligibility, did precisely nothing. And I do mean precise: Bettinger walked through some of the most impressive zeroes I’ve ever seen in a seminar. In general, Bettinger et al. can rule out effects much bigger than 2 percentage points (with -2 percentage points being about equally likely). During the seminar, Bettinger and Michigan’s own Sue Dynarski mentioned that studies testing other ways of communicating this information find similar null effects.

There’s a lot to like about this paper. First, it’s testing a policy that seems obvious to anybody who’s looked at financial aid. If people are unaware of tons of money sitting on the table, some of them have to grab it when we point it out to them. Right? Wrong. Second, it reaches an important policy conclusion* and advances science based on a “statistically insignificant” effect. Bettinger took the exact right approach to his estimated zero effects in the talk: he discussed testing them against other null hypotheses, not just zero. This isn’t done often enough. Zero is the default in most statistical packages, but it’s not really a sensible null hypothesis if we think an effect is likely to be zero. When we’re looking at possibly-zero effects, considering the top and bottom of the confidence interval, as Bettinger does, lets us re-orient our thinking: given the data we’re looking at, what is the largest benefit the treatment could possibly bring? The biggest downside?
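To make “testing against other null hypotheses” concrete, here is a minimal sketch using a normal approximation. The estimate and standard error are made-up numbers for illustration, not figures from the paper, and `z_test` is a hypothetical helper, not anything Bettinger et al. used.

```python
import math

def z_test(estimate, se, null=0.0):
    """Two-sided z-test of an estimate against an arbitrary null value."""
    z = (estimate - null) / se
    # Two-sided p-value under a standard normal: 2 * (1 - Phi(|z|))
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p

# Hypothetical numbers: an estimated effect of 0 percentage points
# with a standard error of 1 percentage point.
estimate, se = 0.0, 1.0

for null in (0.0, 2.0, -2.0):
    z, p = z_test(estimate, se, null=null)
    print(f"H0: effect = {null:+.1f} pp  ->  z = {z:+.2f}, p = {p:.3f}")
```

With these assumed inputs, the test against zero tells us nothing (we can’t reject it), while the tests against +2 and -2 percentage points are the informative ones: they say how large an effect the data can still accommodate.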

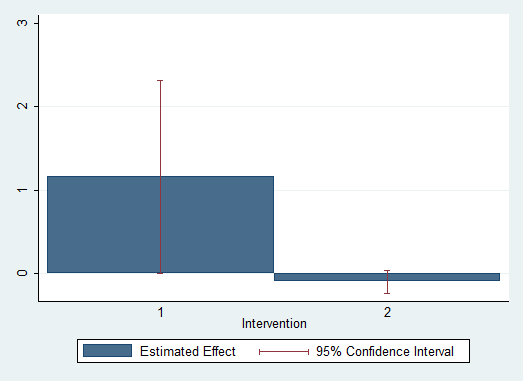

Answering those questions shows us why this is a “good” zero: many statistically insignificant effects are driven by imprecision. They’re positive and pretty big, but the confidence intervals are really wide. The graph above, which I just made with some simulated data, illustrates the difference. On the left, we have a statistically insignificant, badly-measured effect. It could be anywhere from zero to two and a half. The right is a precise zero: the CI doesn’t let us rule out zero (indeed, the effect probably is about zero), but it does let us rule out any effects worth thinking about.
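For anyone who wants to play with the distinction, here is a rough sketch of how one might simulate the two cases. This is not the code behind the graph; the sample sizes, true effects, and noise levels are all assumptions I picked to make the contrast visible, and `mean_ci` is a hypothetical helper using a plain normal-approximation interval.

```python
import numpy as np

def mean_ci(sample, z=1.96):
    """Return (estimate, lower, upper): sample mean with a ~95% normal-approximation CI."""
    sample = np.asarray(sample, dtype=float)
    est = sample.mean()
    se = sample.std(ddof=1) / np.sqrt(sample.size)
    return est, est - z * se, est + z * se

rng = np.random.default_rng(0)

# Assumed scenario 1: a sizable true effect, but few and noisy observations.
noisy = rng.normal(loc=1.2, scale=4.0, size=40)
# Assumed scenario 2: a true effect of zero, measured with lots of data.
precise = rng.normal(loc=0.0, scale=1.0, size=4000)

noisy_est, noisy_lo, noisy_hi = mean_ci(noisy)
precise_est, precise_lo, precise_hi = mean_ci(precise)

print(f"imprecise effect: {noisy_est:.2f}  CI [{noisy_lo:.2f}, {noisy_hi:.2f}]")
print(f"precise zero:     {precise_est:.2f}  CI [{precise_lo:.2f}, {precise_hi:.2f}]")
```

The thing to look at is the width of each interval rather than whether it happens to cover zero: the first is wide enough to be consistent with both nothing and something large, while the second pins the effect down to a narrow band.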